Python + Technical SEO: 5-Step Checklist to Leverage This Integration

Python is a popular programming language offering a simple syntax, enhanced readability, and access to many modules and libraries. It has gained so much traction in the tech industry that even SEO professionals embrace Python’s automation and other capabilities to enhance their tasks.

This powerful, versatile programming language can empower technical SEO teams to build web crawlers, automate tasks, and gather and process data to gain insights into search engine performance. It can help research keywords, analyze ranking factors, and optimize content for improved search engine visibility.

The easy-to-use syntax and huge collection of libraries make it a desirable language for technical SEO experts to learn and use for everyday SEO tasks.

Now, let us look at how SEO experts can make the most of Python.

#1. Start using it for log file analysis

Log files can help your SEO teams understand how the website performs. You can get a comprehensive overview of website requests and fetch data like URLs, date and time of the request, user data, HTTP response status code, etc.

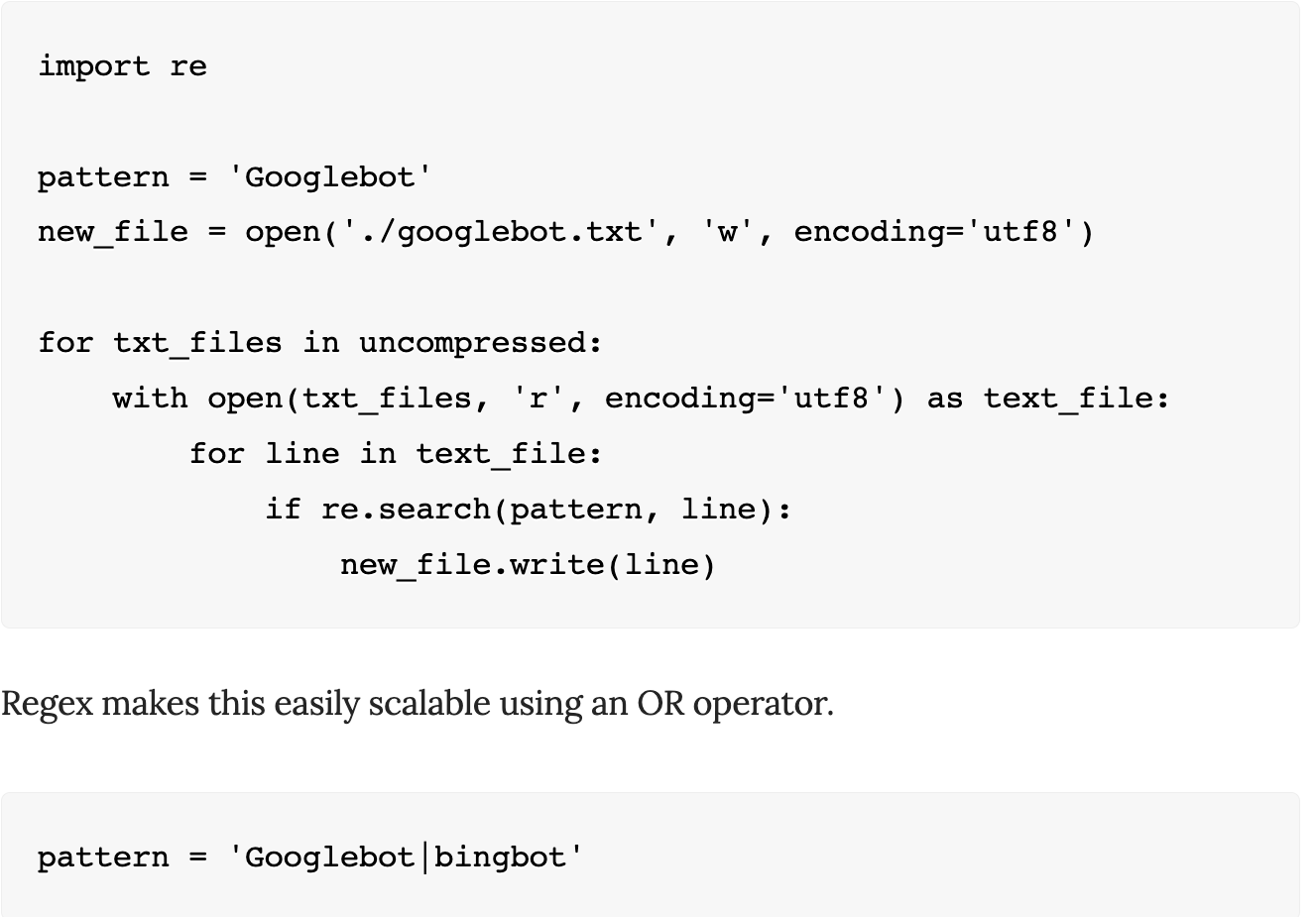

The technical SEO team can analyze log files to identify potential issues like error responses, slow page loading, 404 URLs, pages not crawled, etc. Such analysis provides insights into website security and user activity. When you request log files covering a long period, you’ll get different file types like .log and .gz.

Use the built-in open() function to read .log files, and the gzip library's gzip.open('file.gz') call to read .gz files.
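As a minimal sketch of that step, the helper below picks the right opener based on the file extension. The function name `read_log_lines` and the UTF-8 encoding are assumptions made for illustration; adjust them to match your server's logs.

```python
import gzip
from pathlib import Path
from typing import Iterator


def read_log_lines(path: str) -> Iterator[str]:
    """Yield lines from a plain .log file or a gzip-compressed .gz file."""
    # gzip.open in text mode ("rt") decompresses on the fly;
    # anything that does not end in .gz is read with the built-in open().
    opener = gzip.open if Path(path).suffix == ".gz" else open
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")
```

Because the function is a generator, large log files are read line by line instead of being loaded into memory at once.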

Python's .split() method comes in handy for breaking each line into fields and parsing the data into a more readable format. Simple splitting is not always enough, though, since log files often have inconsistencies. For instance, the overall format can remain the same while the server omits a specific element from some entries.

Address this with a custom log_parse() function that leverages a series of regexes to identify the relevant elements needed for SEO analysis. This keeps the parsed log data readable, accurate, and consistent.
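The regex approach can be sketched as follows. This is not the log_parse() function mentioned above; it is a hypothetical `parse_log_line` helper that assumes the Apache/Nginx "combined" log format and treats the referrer and user agent as optional, which covers entries where the server omitted them.

```python
import re
from typing import Optional

# Assumed layout: Apache/Nginx "combined" log format.
# The trailing referrer and user-agent pair is optional on purpose.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+)(?: (?P<protocol>[^"]*))?" '
    r'(?P<status>\d{3}) (?P<size>\S+)'
    r'(?: "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)")?'
)


def parse_log_line(line: str) -> Optional[dict]:
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])  # numeric status is easier to filter on
    return record
```

Fields missing from a line come back as `None`, so downstream analysis can tell "not logged" apart from an empty value.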

#2. Create web crawlers

Google suggests website speed is important in enhancing the crawl budget. This requires optimizing images, minifying scripts, and reducing redirects. Moreover, rectifying soft error pages and eliminating low-quality and duplicate content can also positively affect the crawl budget. Therefore, regular website crawling becomes an important factor for SEO professionals.

Technical SEO experts can create web crawlers using Python for search engine optimization. Web crawlers are computer programs that browse the World Wide Web in an automated manner by visiting web pages, reading their contents, following links to other web pages, and repeating the process until a given set of web pages is indexed. The crawl results can then help assess how pages perform in search engine results pages (SERPs).

For instance, a web crawler enables technical SEO personnel to identify which websites are linked to a certain page or which pages regularly utilize a particular keyword. This information, in turn, helps determine which pages need optimization or backlinks to improve SERP features.

Here are the quick steps to create a web crawler using Python:

- First, decide on the type of web crawler you want to build: a site crawler or a link crawler. A site crawler searches HTML content for specific text or elements, whereas a link crawler follows all links on a page and visits each to find more links.

- Next, write the code for the crawler. Python has several libraries that can be used for web crawling, such as Scrapy and BeautifulSoup. These libraries provide functions and classes that simplify automated web crawling and extracting information from the HTML.

- Last, set up the crawler. This involves defining which pages the crawler should visit and how often. Also, specify rules for what types of pages should be crawled and what information should be extracted from the HTML.
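The steps above can be sketched as a small link crawler. To keep the example dependency-free it uses the standard library's `html.parser` in place of BeautifulSoup or Scrapy; the names `LinkExtractor`, `crawl`, and `fetch_html`, and the `max_pages` limit, are assumptions for illustration. The rule for which pages to visit here is simply "same host as the start URL".

```python
import urllib.request
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_html(url: str) -> str:
    """Download a page and return its body as text (network call)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url: str, fetch: Callable[[str], str] = fetch_html,
          max_pages: int = 50) -> Dict[str, List[str]]:
    """Breadth-first crawl; returns each visited URL with the links found on it."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    site_map: Dict[str, List[str]] = {}
    while queue and len(site_map) < max_pages:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(fetch(url))
        # Resolve relative links against the current page and drop #fragments.
        links = [urldefrag(urljoin(url, href)).url for href in parser.links]
        site_map[url] = links
        for link in links:
            # Only follow links that stay on the starting host.
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return site_map
```

Passing the `fetch` function in as a parameter makes it easy to add delays, custom headers, or caching later, and to test the crawler against canned HTML. A production crawler should also respect robots.txt and rate limits.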

#3. Use the link status analyser

Add another tool to your arsenal with pylinkvalidator, a standalone, pure-Python link validator and crawler that produces error reports. It works by crawling your website and analyzing the status codes of all the URLs it finds. URLs that return error codes like 404 or 500 are flagged so you can investigate the cause of the issue further.
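To make the idea concrete, here is a minimal sketch of status-code checking written with the standard library. It illustrates the general technique only and is not pylinkvalidator's implementation; `get_status` and `find_broken_links` are hypothetical names.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def get_status(url: str) -> int:
    """Return the HTTP status code for a URL (network call).

    urlopen follows redirects, so 3xx codes are not reported here, and
    connection failures (URLError) are left for the caller to handle.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; the code is on the error.
        return error.code


def find_broken_links(urls: Iterable[str],
                      status_of: Callable[[str], int] = get_status) -> Dict[str, int]:
    """Map every URL that answers with a 4xx or 5xx status to its code."""
    broken: Dict[str, int] = {}
    for url in urls:
        code = status_of(url)
        if code >= 400:
            broken[url] = code
    return broken
```

The returned dictionary can be written to a report so that each flagged URL is investigated in turn.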

Moreover, SEO teams can speed up the crawl by installing the following optional libraries:

- lxml: Speeds up HTML page parsing (may need C libraries).

- gevent: Lets pylinkvalidator use green threads.

- cchardet: Speeds up document encoding detection.

As mentioned earlier, the script crawls an entire website and analyzes each URL’s status code. This may take a long time for larger websites, so try the additional libraries above to speed up the process.

Use the -P or --progress option when crawling to gauge the crawl's progress, since there is otherwise no visual sign of it. Additionally, the --workers='number of workers' option lets you crawl with more threads, and --mode=process --workers='number of workers' crawls with multiple processes instead.
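The effect of adding workers can be illustrated in plain Python with a thread pool. This is a general sketch of the concept, not how pylinkvalidator is implemented; `check_in_parallel` and its `status_of` parameter (any function that returns a status code for a URL) are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def check_in_parallel(urls: Iterable[str],
                      status_of: Callable[[str], int],
                      workers: int = 4) -> Dict[str, int]:
    """Look up the status of many URLs using a pool of worker threads."""
    url_list = list(urls)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input URLs.
        codes = pool.map(status_of, url_list)
        return dict(zip(url_list, codes))
```

Threads suit this job because the time is spent waiting on the network. `concurrent.futures.ProcessPoolExecutor` offers the same interface when separate processes are preferred.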

#4. Automate repetitive tasks

Python can do wonders for the SEO team on the automation front. The Requests and Beautiful Soup libraries can help automate tedious SEO tasks.

Requests is useful for making web requests, while Beautiful Soup helps parse the HTML content of web pages. Combined, these libraries can create web crawlers that traverse websites and assemble information for SEO assessment.

For example, a web crawler could crawl the website to provide the number of incoming links, anchor text used within those links, domain authority of the connecting website, etc.
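As a small illustration of collecting links and their anchor text from a page, the sketch below parses HTML that has already been downloaded. It uses the standard library's `html.parser` as a dependency-free stand-in for Beautiful Soup, and the names `AnchorTextParser` and `anchor_report` are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class AnchorTextParser(HTMLParser):
    """Record (href, anchor text) for every <a href="..."> element."""

    def __init__(self) -> None:
        super().__init__()
        self.anchors: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only collect text while inside a link that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.anchors.append((self._href, text))
            self._href = None


def anchor_report(html: str) -> Tuple[List[Tuple[str, str]], Counter]:
    """Return all (href, anchor text) pairs plus a count of links per target."""
    parser = AnchorTextParser()
    parser.feed(html)
    return parser.anchors, Counter(href for href, _ in parser.anchors)
```

Running this over every crawled page and summing the counters gives the number of links pointing at each URL together with the anchor text used.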

Furthermore, Python can generate scripts to keep an eye on SEO metrics. For example, SEO experts can leverage a Python script to monitor keyword rankings over time or observe any changes in organic traffic sourced from search engine result pages (SERPs).
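A monitoring script of this kind can be as simple as comparing the earliest and latest recorded positions for each keyword. The sketch below assumes the rankings have already been collected elsewhere as (ISO date, position) pairs; the function name, the data layout, and the `threshold` parameter are all hypothetical.

```python
from typing import Dict, List, Tuple


def ranking_changes(history: Dict[str, List[Tuple[str, int]]],
                    threshold: int = 3) -> Dict[str, int]:
    """Return keywords whose position moved by at least `threshold` places.

    A negative value means the keyword climbed (for example from 8 to 3);
    a positive value means it dropped.
    """
    changes: Dict[str, int] = {}
    for keyword, readings in history.items():
        ordered = sorted(readings)  # ISO dates sort chronologically as strings
        if len(ordered) < 2:
            continue  # need at least two readings to measure a change
        delta = ordered[-1][1] - ordered[0][1]
        if abs(delta) >= threshold:
            changes[keyword] = delta
    return changes
```

Scheduling the script to run regularly and sending the result by email or chat turns it into a lightweight alert for ranking swings.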

Another way to use Python is to automate multiple tasks like generating monthly reports, link-building activities, and checking for broken links. Moreover, a Python script can scrape websites for guest post opportunities and provide necessary contact information to send outreach emails.

#5. Optimize your website images

Images are important elements in making your website content engaging. But they come at a cost: more storage space and more time for crawlers to process them and for pages to load.

For instance, a single JPG image may take up 1 MB. Four such images on one page, coupled with the site structure and text, can add up to a whopping 6 MB. This can be a problem when a user on a slow connection is trying to locate information quickly. Compressing the images is possible, but manually compressing hundreds of images and placing them back into hundreds of pages can be tedious and time-consuming.

This is where Python helps, since it can compress images automatically. You’ll need a library like Pillow, a fork of the Python Imaging Library (PIL). With Pillow, you can easily compress and optimize images in bulk, making it easier to manage large websites. Here’s how to use Pillow.

Depending on your batch processing settings, you can adjust the size of individual images or of every image on the website. While this could reduce an image's size to 400 kilobytes, the image quality would suffer. Nevertheless, it would result in faster page loading.
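A minimal sketch of bulk compression with Pillow might look like this. The function name `compress_images`, the folder layout, and the default `max_size` and `quality` values are assumptions chosen for illustration; tune them against your own quality requirements.

```python
from pathlib import Path
from typing import List, Tuple

from PIL import Image  # provided by the Pillow package


def compress_images(source_dir, output_dir,
                    max_size: Tuple[int, int] = (1200, 1200),
                    quality: int = 70) -> List[Path]:
    """Resize and re-encode every JPG/PNG in source_dir as a JPEG in output_dir."""
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    for path in sorted(Path(source_dir).iterdir()):
        if path.suffix.lower() not in {".jpg", ".jpeg", ".png"}:
            continue  # skip anything that is not an image we handle
        with Image.open(path) as image:
            converted = image.convert("RGB")  # JPEG cannot store transparency
            converted.thumbnail(max_size)     # shrinks in place, keeps aspect ratio
            target = out / (path.stem + ".jpg")
            converted.save(target, "JPEG", quality=quality, optimize=True)
        written.append(target)
    return written
```

A call such as `compress_images("images", "images_optimized", quality=60)` leaves the originals untouched and writes the smaller copies to a separate folder. Lower `quality` values give smaller files at the cost of visible artifacts.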

Alternatively, you can use the image optimization application by Victor Domingos, available on GitHub.

Wrapping up

The use cases mentioned above show that Python can be a powerful tool for SEO teams. Moreover, SEO experts can lean on Python’s active community of developers, who are always willing to assist when needed. This support makes Python an increasingly popular choice for SEO professionals.

These use cases are far from exhaustive. By automating routine work, Python can greatly enhance an SEO team's productivity and let its members focus on finding solutions instead of merely identifying problems.